Advanced Deployment of Ghost in 2 minutes with Docker

At this point, if you look closely, it should come as no surprise that my blog is now powered by Ghost. It's also deployed inside a Docker container on a DigitalOcean droplet.

I've been thinking about my setup and how easy it was to get it running, so I figured I might want to write about it. There are plenty of tutorials out there on how to deploy this blogging platform, but my configuration, and hence this article, is a little more advanced, since it attempts to cover areas many of those don't. It's also an incredibly fast process (so fast that describing it is miles slower than actually doing it), a testament to what Docker brings to application deployment.

I'm hoping a walkthrough of the steps, in the form of a tutorial, will be beneficial to a broader audience. Specifically, this article will cover:

- Docker Compose.

- Proper reverse proxying with Nginx, including virtual hosts, SSL and redirects.

- 12-factor app configuration for Ghost.

- Database storage besides SQLite (MariaDB here).

- Backups.

Deploying Ghost

All set, let's start this tutorial by taking a look at our directory structure.

Our directory structure

Many hosted Ghost users manage/fork/copy their Ghost installation inside their site. Mine is incredibly simple, as you'll see:

blackxored@adrian-mbp ~/Code/site $ tree -L 1
.
├── adrianperez.org.crt
├── adrianperez.org.key
├── config.env
├── config.js
├── content
└── docker-compose.yml

1 directory, 6 files

We don't need to have our Ghost installation bundled within our site, which gives us the added benefit that upgrades become a ton easier. In fact, the only directory that matches that of a Ghost installation is the content directory, and the reason it exists is that a custom theme is used.

Notice that we nevertheless have everything necessary to run our site: our Ghost configuration, SSL certificates, custom themes and our Docker image definition. Each of them will be described in detail.

Creating our image definition

Our image definition is powered by Docker Compose, allowing us to have multiple images, specify our dependencies and essentially describe our application in YAML.

db:
  image: mariadb
  ports:
    - "3306:3306"
  volumes_from:
    - adrianperez.org-data
  env_file:
    - config.env
blog:
  image: ghost
  volumes_from:
    - adrianperez.org-data
  links:
    - db
  env_file:
    - config.env
  ports:
    - "2368:2368"

As the above points out, we have 2 images, our database and our blog itself, which per best practices will run in separate containers (we don't need to define a "web" image, as you'll see shortly).

We're using MariaDB (a MySQL fork) for our database, and we're attaching it to a data volume (adrianperez.org-data). Our database data, as well as Ghost's, will live on that volume. Keeping our data in a separate volume ensures that it persists when containers are destroyed, is also a best practice, and makes backups significantly easier, as you'll see.

Defining our configuration

Now let's move to our Ghost configuration itself.

In 12-Factor App fashion, we will rely on environment variables to configure our application, so we will refactor the default configuration file that comes with Ghost to leverage this flexibility.
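As a minimal illustration of the idea, separate from the real configuration file, the sketch below reads settings from an environment-like object and falls back to defaults. The `setting` helper and the `sampleEnv` values are hypothetical and exist only for this example; in a real process you would pass `process.env` instead.

```javascript
// Read a setting from an environment-like object, falling back to a default
// when the variable is missing or empty.
// `env` is a parameter (instead of reading process.env directly) so the
// function can be tried out with sample values.
function setting(env, name, fallback) {
  var value = env[name];
  return value === undefined || value === "" ? fallback : value;
}

// Hypothetical sample environment, for illustration only.
var sampleEnv = { DB_CLIENT: "mysql", URL: "https://example.org" };

var settings = {
  url: setting(sampleEnv, "URL", null),
  dbClient: setting(sampleEnv, "DB_CLIENT", "sqlite3"),
  dbHost: setting(sampleEnv, "DB_HOST", "localhost")
};

console.log(settings.dbClient); // mysql (taken from the environment)
console.log(settings.dbHost);   // localhost (default, DB_HOST is not set)
```

The same image can then run against SQLite locally and MariaDB in production without any code change, only a different set of variables.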

Let's take a look at config.js:

var config,
  url = require("url"),
  path = require("path");

// Build the database settings from environment variables,
// falling back to SQLite when DB_CLIENT is not set.
function getDatabase() {
  var db_config = {};

  if (process.env["DB_CLIENT"]) {
    db_config["client"] = process.env["DB_CLIENT"];
  } else {
    return {
      client: "sqlite3",
      connection: {
        filename: path.join(process.env.GHOST_CONTENT, "/data/ghost.db")
      },
      debug: false
    };
  }

  // A linked container exposes DB_PORT in the form tcp://<ip>:<port>.
  var db_uri = /^tcp:\/\/([\d.]+):(\d+)$/.exec(process.env["DB_PORT"]);

  if (db_uri) {
    db_config["connection"] = {
      host: db_uri[1],
      port: db_uri[2]
    };
  } else {
    db_config["connection"] = {
      host: process.env["DB_HOST"] || "localhost",
      port: process.env["DB_PORT"] || "3306"
    };
  }

  if (process.env["DB_USER"])
    db_config["connection"]["user"] = process.env["DB_USER"];
  if (process.env["DB_PASSWORD"])
    db_config["connection"]["password"] = process.env["DB_PASSWORD"];
  if (process.env["DB_DATABASE"])
    db_config["connection"]["database"] = process.env["DB_DATABASE"];

  return db_config;
}

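// Build the mail settings from environment variables.
function getMailConfig() {
  var mail_config = {};

  if (process.env.MAIL_HOST) {
    mail_config["host"] = process.env.MAIL_HOST;
  }
  if (process.env.MAIL_SERVICE) {
    mail_config["service"] = process.env.MAIL_SERVICE;
  }
  if (process.env.MAIL_USER || process.env.MAIL_PASS) {
    // The auth object must exist before its user/pass fields are set.
    mail_config["auth"] = {};
  }
  if (process.env.MAIL_USER) {
    mail_config["auth"]["user"] = process.env.MAIL_USER;
  }
  if (process.env.MAIL_PASS) {
    mail_config["auth"]["pass"] = process.env.MAIL_PASS;
  }

  return mail_config;
}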

// Ghost needs to know its public URL; refuse to start without it.
if (!process.env.URL) {
  console.log("Please set URL environment variable to your blog's URL");
  process.exit(1);
}

config = {
  production: {
    url: process.env.URL,
    database: getDatabase(),
    mail: getMailConfig(),
    server: {
      host: "0.0.0.0",
      port: "2368"
    },
    paths: {
      contentPath: path.join(process.env.GHOST_CONTENT, "/")
    }
  },
  development: {
    url: process.env.URL,
    database: getDatabase(),
    mail: getMailConfig(),
    server: {
      host: "0.0.0.0",
      port: "2368"
    },
    paths: {
      contentPath: path.join(process.env.GHOST_CONTENT, "/")
    }
  }
};

module.exports = config;

It's a long configuration, I acknowledge, but now everything can be configured dynamically. We've also made some adjustments related to the Docker Ghost image itself (notice the GHOST_CONTENT references).
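The least obvious part of the configuration is the regular expression applied to DB_PORT. When a container is linked under the alias db, Docker sets DB_PORT to an address of the form tcp://<ip>:<port> rather than a bare port number. The sketch below isolates that parsing step so it can be tried on its own; the `parseLinkAddress` name and the sample address are made up for this example.

```javascript
// Parse a Docker link address such as "tcp://172.17.0.5:3306" into host and port.
// Returns null when the value is not in that form (for example a bare port number),
// which is the case where the configuration falls back to DB_HOST and DB_PORT as given.
function parseLinkAddress(value) {
  var match = /^tcp:\/\/([\d.]+):(\d+)$/.exec(value || "");
  if (!match) {
    return null;
  }
  return { host: match[1], port: match[2] };
}

console.log(parseLinkAddress("tcp://172.17.0.5:3306")); // { host: '172.17.0.5', port: '3306' }
console.log(parseLinkAddress("3306"));                  // null
```

Note that the port comes back as a string, exactly as it is captured by the regular expression.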

Our config.env specifies our blog's URL, the database engine to use and its parameters, as well as the Node environment to run under (you'd be surprised at how many people run Ghost in development).

DB_CLIENT=mysql

MYSQL_USER=blog

MYSQL_PASSWORD=<REDACTED>

MYSQL_ROOT_PASSWORD=<REDACTED>

MYSQL_DATABASE=blog

URL=https://adrianperez.org

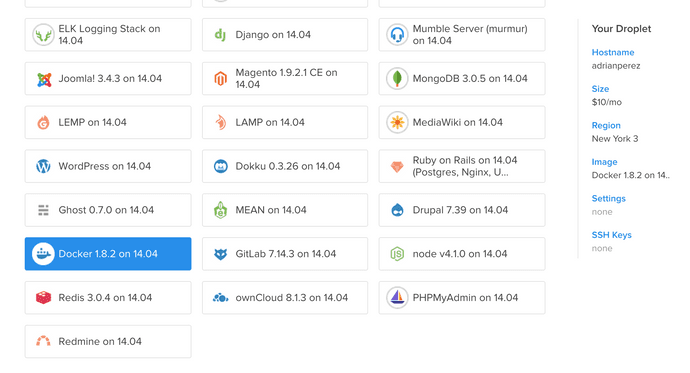

NODE_ENV=productionCreating our droplet in DigitalOcean

Now we're moving to deployment itself, and we will be using DigitalOcean for it.

We need to create a new droplet, and they conveniently provide a Docker image themselves; otherwise we would need to install Docker ourselves (which is easy, but definitely boilerplate).

Creating a data volume

After we create and log in to our host, we need to create the data volume we talked about, which will hold our application and DB data:

docker create --name adrianperez.org-data -v /var/lib/mysql -v /var/lib/ghost busybox

This volume provides the necessary directories, /var/lib/ghost and /var/lib/mysql, which will be mounted in our containers.

Starting the Nginx proxy service

We will be exposing our blog to the world through Nginx, but we won't create a separate image for it, nor configure it by hand. We'll take advantage of nginx-proxy instead. While we're at it, we also copy our SSL certificates to /root/certs. Notice that by convention the certificates are named after our site.

docker run -d -p 80:80 -p 443:443 -v /var/run/docker.sock:/tmp/docker.sock:ro -v /root/certs:/etc/nginx/certs jwilder/nginx-proxy

Above we're running yet another Docker image, specifying that we will listen for HTTP and HTTPS requests, and that we will grab SSL certificates from /root/certs (which gets mounted as /etc/nginx/certs).

The proxy by itself doesn't run our site, in case you're wondering, so we'll do that next.

Running our proxied Docker container

Now it's time to run our site container itself:

docker run -d -e VIRTUAL_HOST=adrianperez.org,blog.adrianperez.org,www.adrianperez.org --env-file config.env --volumes-from adrianperez.org-data ghost

The VIRTUAL_HOST env var is pretty self-explanatory: we tell our proxy all the hosts our site should respond to.
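To make the host matching concrete, here is a rough conceptual sketch of what a comma-separated VIRTUAL_HOST value means for incoming requests. It is not how nginx-proxy is implemented (the proxy generates Nginx configuration from the running containers); the `parseVirtualHosts` and `servesHost` functions are hypothetical names used only for illustration.

```javascript
// Split a comma-separated VIRTUAL_HOST value into a list of host names.
function parseVirtualHosts(value) {
  return value
    .split(",")
    .map(function (host) { return host.trim().toLowerCase(); })
    .filter(function (host) { return host.length > 0; });
}

// Decide whether a request's Host header belongs to this site.
// Any ":port" suffix is dropped before comparing.
function servesHost(virtualHosts, hostHeader) {
  var name = hostHeader.split(":")[0].toLowerCase();
  return virtualHosts.indexOf(name) !== -1;
}

var hosts = parseVirtualHosts("adrianperez.org,blog.adrianperez.org,www.adrianperez.org");
console.log(servesHost(hosts, "www.adrianperez.org")); // true
console.log(servesHost(hosts, "example.com"));         // false
```

Requests for any of the three names end up at the same Ghost container, while other host names are not routed to it.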

Now, assuming our DNS is set up properly (just point it to your droplet's address), we're ready to access our site.

Since we configured HTTPS, we also get a 301 redirect for free if we access the site through HTTP. Neat.
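For readers unfamiliar with what that redirect looks like on the wire, the sketch below builds the kind of response a proxy sends for a plain-HTTP request: status 301 with a Location header pointing at the same host and path over HTTPS. This is only an illustration of the behaviour, not the proxy's actual code; `httpsRedirect` and the `/about/` path are assumptions made for the example.

```javascript
// Build the response for a plain-HTTP request:
// a permanent redirect to the same host and path over HTTPS.
function httpsRedirect(hostHeader, path) {
  var host = hostHeader.split(":")[0]; // drop any explicit port such as ":80"
  return {
    status: 301,
    headers: { Location: "https://" + host + path }
  };
}

var response = httpsRedirect("adrianperez.org:80", "/about/");
console.log(response.status);           // 301
console.log(response.headers.Location); // https://adrianperez.org/about/
```

Because the status is 301 (permanent), browsers may remember the redirect and go straight to HTTPS on later visits.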

Backing up

Backing up our data is incredibly easy with Docker and data volumes. We mount our data volume into an (almost) empty image and back up the directories themselves.

root@adrianperez:~# docker run --rm -i --volumes-from adrianperez.org-data busybox tar cvf - /var/lib/mysql /var/lib/ghost | gzip > ~/adrianperez.org-data-`date +%s`.tgz

Conclusion

I hope you agree that the process of deploying Ghost is incredibly easy and powerful when it's coupled with Docker and DigitalOcean, and that you liked this tutorial. Let me know in the comments below.